The American Art Collaborative (AAC) is a consortium of 14 art museums in the United States committed to establishing a critical mass of linked open data (LOD) on the semantic web. The Collaborative was funded from 2014 to 2017 by grants from the Andrew W. Mellon Foundation and the Institute of Museum and Library Services.

What is Linked Open Data (LOD)?

In computing, Linked Data describes a method of publishing structured data so that it can be interlinked and therefore useful in web implementations. Tim Berners-Lee, director of the World Wide Web Consortium (W3C), coined the term. Linked Open Data (LOD) refers to data that is made available for public use via Linked Data.

About the American Art Collaborative

The Collaborative believes that LOD offers rich potential to increase the understanding of art by expanding access to cultural holdings, by deepening research connections for scholars and curators, and by creating public interfaces for students, teachers, and museum visitors. AAC members are committed to learning together about LOD, to identifying best practices for publishing museum data as LOD, and to exploring applications that will help scholars, educators, and the public. AAC is committed to sharing best practices, guidelines, and lessons learned with the broader museum, archives, and library community, building a network of practitioners to contribute quality information about works of art in their collections to the linked open data cloud.

Goals and Objectives

The American Art Collaborative is dedicated to creating a diverse critical mass of LOD on the Web on the subject of American art by putting the collections of the participating museums in the cloud and tagging this data as LOD. This will exponentially enhance the access, linking, and sharing of information about American art in a way that transcends what is currently possible with structured data.

The goals of the initiative are to simplify access to digital information across museum collections for both researchers and the public, to create new opportunities for discovery, research and collaboration, to promote creative reuse of data on American art across numerous applications, and to showcase for museums and other scholarly institutions the inherent possibilities of LOD.

Grant Support

Thanks to a planning grant from the Andrew W. Mellon Foundation, a national leadership grant from the Institute of Museum and Library Services, and a second grant from the Andrew W. Mellon Foundation, the AAC was able to convert the American art holdings of partner museums to LOD.

Plans over the grant period are described in detail in the Project Road Map [PDF] created under the Mellon planning grant. The project uses a federated model whereby each AAC member assumes responsibility for updating and maintaining its own data.

The AAC is managed by Eleanor E. Fink, an international art and technology consultant, and founder of AAC.

Members of the AAC

Amon Carter Museum of American Art

Amanda Blake, Director of Education and Library Services

Archives of American Art, Smithsonian Institution

Karen Weiss, Supervisory Information Resources Specialist

Michelle Herman, Digital Experience Manager

Toby Reiter, Information Technology Specialist

Autry Museum of the American West

Rebecca Menendez, Director, Information Services and Technology

Colby College Museum of Art

Charles Butcosk, Digital Projects Developer

Crystal Bridges Museum of American Art

Shane Richey, Digital Media Manager

Dallas Museum of Art (DMA)

Brian Long, Systems Engineer

Brian MacElhose, Collections Database Administrator

Indianapolis Museum of Art (IMA)

Stuart Alter, Director of Technology

Heather Floyd, Software Developer

Samantha Norling, Digital Collections Manager

Thomas Gilcrease Institute of American History and Art

Diana Folsom, Head of Collection Digitization

National Portrait Gallery, Smithsonian Institution

Sue Garton, Data Administrator

National Museum of Wildlife Art

Emily Winters, Registrar

Princeton University Art Museum

Cathryn Goodwin, Manager of Collections Information

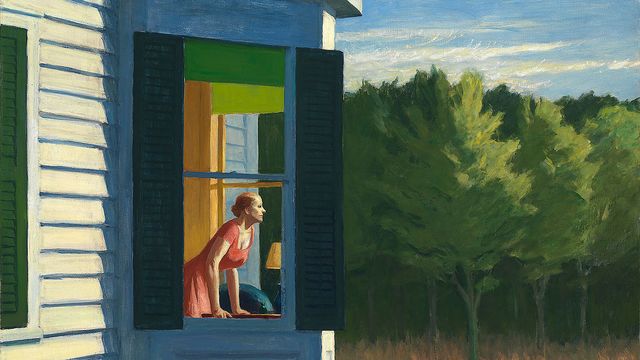

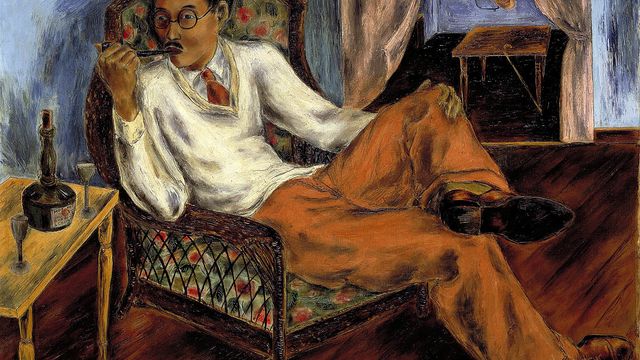

Smithsonian American Art Museum (SAAM)

Rachel Allen, Deputy Director

Sara Snyder, Chief, Media & Technology Office

Richard Brassell, Software Developer

The Walters Art Museum

Will Hays, Assistant Registrar, Data and Images

Yale Center for British Art

Emmanuelle Delmas-Glass, Collections Data Manager

Consultants

Eleanor Fink, who founded the AAC, serves as its manager and the point of contact with AAC members, consultants, and advisers. She plans and executes the meetings, resolves issues, tracks project goals and deliverables, and communicates with practitioners in the field. Fink served for 13 years at the Smithsonian and then at the J. Paul Getty Trust, initially as founder of the Getty vocabulary program, as program officer for scholarly resources, and then as director of the Getty Information Institute (GII).

Data modeling coordinator Emmanuelle Delmas-Glass helps AAC members prepare their data and identify data points for mapping to the CIDOC CRM. Delmas-Glass is the Collections Data Manager in the Collections Information & Access Department at Yale Center for British Art, which has been working with the CIDOC CRM for several years.

CIDOC CRM expert Stephen Stead works with USC’s Information Sciences Institute to review the application of the CRM to data mapping and to provide a hands-on workshop and data expertise as needed throughout the 18-month grant period. Stead, from Paveprime Ltd., is a highly qualified expert who helped build and develop the CRM.

University of Southern California, Information Sciences Institute (ISI), with principal support from Pedro Szekely, applies its KARMA data integration tool to convert AAC records to LOD, develops a curation linking tool, and provides hands-on workshops on how to map, refresh, and maintain data. ISI is a world leader in research and development of cyber security, advanced information processing, and computer and communications technologies. A unit of the University of Southern California’s Viterbi School of Engineering, ISI is one of the nation’s largest, most successful university-affiliated computer research institutes.

Duane Degler, Design for Context (DfC), serves as facilitator to coordinate meetings, develop application specifications with the AAC members, and advise on best practices for using LOD. Kate Blanch, Data Architect, Design for Context, works with the museums to prepare their collections data and address museum-specific issues. DfC specializes in articulating visual and interaction design requirements for web applications, software, and websites with specific interest in leveraging linked data and semantic technologies.

Advisory Council

Robert Sanderson, Senior Semantic Architect, J. Paul Getty Trust

Sanderson’s research focuses on digital libraries, archives, and museums and their interaction via LOD and the web. He brings relevant experience from his participation in the International Image Interoperability Framework and LOD experience with cultural heritage institutions.

Thorny Staples, Director (retired) of the Office of Research Information Services at the Smithsonian Institution, Office of the Chief Information Officer (OCIO)

Staples’ work has touched almost every area of digital projects, from technical programming to software development, with a focus on research systems in the humanities.

Craig Knoblock, Director of Data Integration, Information Sciences Institute (ISI), USC

Knoblock is an expert in the area of AI and Information Integration. He has worked on a wide range of topics within this area including information extraction, wrapper learning, source modeling, record linkage, mashup construction, and data integration. Craig and his team specialize in research and development of tools that streamline creation of linked data from existing data repositories (e.g. KARMA).

Martin Doerr, Research Director at the Information Systems Laboratory and head of the Centre for Cultural Informatics of the Institute of Computer Science, FORTH

Doerr has been leading the development of systems for knowledge representation and terminology, metadata and content management. His long-standing interdisciplinary work and collaboration with the International Council of Museums on modeling cultural and historical information has resulted in an ISO Standard, ISO 21127:2014, also known as the CIDOC CRM, a core ontology for the purpose of schema integration across institutions.

Vladimir Alexiev, Lead, Data and Ontology Management Group, Ontotext Corp

Alexiev works on semantic data integration, thesaurus/KOS management, ontology and application profile development in a variety of domains, in particular NLP and cultural heritage. He had significant influence on the CRM mapping of the British Museum and YCBA, created the first implementation of CRM FR Search, developed the LOD representation of the Getty vocabularies, and currently works on mapping the Getty Museum data to CRM and on data integration and authorities for the European Holocaust Research Infrastructure.

Partner Meeting – Oct. 2016

The American Art Collaborative convened its project partners, advisors, consultants, and J. Paul Getty Trust staff at the offices of USC’s Information Sciences Institute (ISI) in Marina del Rey, CA from October 3-5, 2016. This meeting was the second multi-day in-person meeting of all the partners in the collaborative.

In order to provide inspiration, education, and insights for the partners, Monday afternoon and some of Tuesday morning were dedicated to presentations on other projects and uses of Linked Open Data. There are links below to selected presentations:

- Rob Sanderson, The Provenance of Madame Bonnier: Museum Linked Data

- David Newbury, Carnegie Museum of Art: Multiple applications

- Mark Derthick, Bungee View Tag Wall, exploration using the Smithsonian American Art Museum collection

- Sara Snyder, Linked Open Data at SAAM: Past, Present, Future

- Emmanuelle Delmas-Glass, IIIF and Mirador at the YCBA: image based scholarly collaboration and research

- Matthew Lincoln, The Joys and Pains of Using Linked Open Data for Research

Educational Briefing Videos

During the first phase of the American Art Collaborative, under the Mellon Foundation Planning Grant of 2014, the AAC organized a series of “educational briefings”: webinars designed to help AAC members grow their expertise in LOD and related topics. Video recordings and slide decks from those sessions have been shared here, for the benefit of AAC members as well as any other interested parties.

2014 Kickoff Meeting

Linked Data and Tools

Presented to the American Art Collaborative on September 30, 2014 by Pedro Szekely – USC/Information Sciences Institute

Ontologies and the CIDOC CRM

Stephen Stead talks about ontologies, in particular the CIDOC CRM.

The Role of Linked Open Data at Yale Center for British Art

Emmanuelle Delmas-Glass and Matthew Hargraves of the Yale Center for British Art discuss how the center converted its data to LOD and some of the research applications they have seen.

Video of Emmanuelle Delmas-Glass on LOD at Yale Center for British Art

Getty Vocabularies and LOD

Patricia Harpring and Joan Cobb of the Getty Vocabularies cover how the Getty Research Institute hopes the vocabularies will be used as LOD and how they converted their data.

Video of Patricia Harpring and Joan Cobb on Getty Vocabularies and LOD

Slides of Patricia Harpring and Joan Cobb on Getty Vocabularies and LOD

International Image Interoperability Framework (IIIF) for Museums

Robert Sanderson of the AAC Advisory Council presents several LOD use cases that illustrate how images and linked data can play together. Examples are drawn from the British Museum, Yale Center for British Art, the V & A, and the Fitzwilliam Museum.

Video of Robert Sanderson on International Image Interoperability Framework (IIIF) for Museums

DPLA’s objectives, platform and best practices

Tom Johnson, Metadata and Platform Architect, DPLA (Digital Public Library of America) discusses DPLA’s objectives, platform and best practices.

Video of Tom Johnson on DPLA’s objectives, platform and best practices

ResearchSpace

Dominic Oldman, ResearchSpace principal investigator, discusses the goals of ResearchSpace, the tools used to create LOD, and the aggregation model upon which ResearchSpace is based. He also provides LOD use cases.

Perspectives and Considerations

Duane Degler and Neal Johnson of Design for Context provide an overview of what we’ve learned in the Educational Briefings so far.

Video of Duane Degler and Neal Johnson on Perspectives and Considerations

Slides of Duane Degler and Neal Johnson on Perspectives and Considerations

March 23 – Q&A Session with ISI

Question and Answer session for AAC members, with ISI covering publishing open data, use of repositories like GitHub, and data formats.

Europeana and LOD

Antoine Isaac, R&D Director of the Europeana Project, discusses where Europeana is headed, and demonstrates some of their use cases and applications.

Resources

Overview and Recommendations for Good Practices

Overview and Recommendations for Good Practices [PDF 3.6MB]

This guide publication, one of the key products and outcomes of the AAC, is not a starter kit or step-by-step technical manual. Rather, its purpose is to share with the museum community what AAC set out to accomplish, how AAC approached LOD, the tools we used, the trials we encountered, the lessons we learned, and our recommendations for good practices for museums interested in joining the LOD community.

Read Selected Highlights from the Recommendations for Good Practices Publication

These recommendations are excerpted from the AAC publication, Overview and Recommendations for Good Practices [PDF]

The following text is meant for museums and other types of cultural-heritage institutions but often uses “museum” to simplify wording. The text is addressed to any staff member in charge of an institution’s data.

At a Glance: Recommendations for Good Practices When Initiating LOD

- Establish Your Digital Image and Data Policies

- Choose Image and Data Licenses That Are Easily Understood

- Plan Your Data Selection

- Recognize That Reconciliation and Standards Are Needed to Make Most Effective Use of LOD

- Choose Ontologies with Collaboration in Mind

- Use a Target Model

- Create an Institutional Identity for URI Root Domains

- Prepare Your Data and Be Sure to Include Unique Identifiers

- Be Aware of Challenges When Exporting Data from Your CIS; Develop an Extraction Script, or API

- If Outsourcing the Mapping and Conversion of Your Data to LOD, Do Not Assume the Contractor Understands How Your Data Functions or What You Intend to Do with It

- Accept That You Cannot Reach 100 Percent Precision, 100 Percent Coverage, 100 Percent Completeness: Start Somewhere, Learn, Correct

- Operationalize the LOD within Your Museum

Linked Open Data FAQs

These FAQs are excerpted from the AAC publication, Overview and Recommendations for Good Practices [PDF]

What is Linked Open Data (LOD)?

In computing, Linked Data describes a method of publishing structured data so that it can be interlinked and therefore useful in web implementations. Tim Berners-Lee, director of the World Wide Web Consortium (W3C), coined the term. Linked Open Data (LOD) refers to data that is made available for public use via Linked Data.

What is the Semantic Web?

“Semantic Web” refers to the vision and set of technologies that would enable identities to be linked semantically via the web so that accurate web searches are possible. To achieve the “semantic glue” that provides context and meaning in documents, W3C’s Resource Description Framework (RDF) is used to tag information, much like Hypertext Markup Language (HTML) is used for publishing on the web.

RDF is a W3C standard model (i.e., web based and web friendly) for interchanging data on the web. RDF breaks down knowledge into discrete pieces, according to rules about the semantics, or meaning, of those pieces, which it represents as a list of statements with three terms: subject, predicate, and object, known as triples.

Each subject, predicate, and object is a Uniform Resource Identifier (URI) or, for the object, a literal value such as a number or American Standard Code for Information Interchange (ASCII) string. An organization that produces LOD must select one or more ontologies to play the key role of defining the meaning of the terms used in the subject/predicate/object statements. In essence, RDF and the ontology give context and meaning to a statement, and the URIs provide the identifiers for all the entities described and published as LOD, which allows them to be discovered and connected. Approximately 149 billion triples are currently in the LOD cloud.

What are RDF and URI?

As stated above, Resource Description Framework (RDF) is a W3C standard model (i.e., web based and web friendly) for interchanging data on the web. RDF is a way for computers to work with facts and express statements about resources. RDF breaks down knowledge into discrete pieces, according to rules about the semantics, or meaning, of those pieces, which it represents as a list of statements in the form of subject, predicate, and object, known as triples. The format mimics English sentence structure, which accommodates two nouns (the subject and object) and a type of relationship expressed as a verb (the predicate).

Each subject, predicate, and object is a Uniform Resource Identifier (URI) or, for the object, a literal value such as a number or ASCII string. A URI is typically expressed as a Uniform Resource Locator (URL), which provides the location of the identified resource on the web.
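The triple structure described above can be sketched in a few lines of Python. This is only an illustration: the museum.example URIs and the painting are hypothetical, the two Dublin Core predicates are used simply because they are widely known, and the output is written in an N-Triples-like syntax.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class URI:
    """An identifier for a resource; allowed in any position of a triple."""
    value: str


@dataclass(frozen=True)
class Literal:
    """A plain string value; allowed only in the object position."""
    value: str


@dataclass(frozen=True)
class Triple:
    subject: URI
    predicate: URI
    obj: Union[URI, Literal]


def term(x):
    """Write a URI as <...> and a literal as a quoted, escaped string."""
    if isinstance(x, URI):
        return f"<{x.value}>"
    escaped = x.value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def to_ntriples(t):
    """Serialize one triple as a single N-Triples-style line."""
    return f"{term(t.subject)} {term(t.predicate)} {term(t.obj)} ."


# Hypothetical identifiers, for illustration only.
PAINTING = URI("https://museum.example/object/1")
ARTIST = URI("https://museum.example/person/7")

triples = [
    # "The painting has the title 'Sunset Study'" (object is a literal).
    Triple(PAINTING, URI("http://purl.org/dc/terms/title"), Literal("Sunset Study")),
    # "The painting was created by this artist" (object is another URI).
    Triple(PAINTING, URI("http://purl.org/dc/terms/creator"), ARTIST),
]

for t in triples:
    print(to_ntriples(t))
```

Each printed line reads like a short sentence: the painting (subject) has a title or creator (predicate), which is either a string or another identified entity (object).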

What is an ontology?

Simply stated, an ontology is a schema or conceptual framework that gives data meaning. As described by Wikipedia, an ontology in the field of information science is the “representation of entities, ideas, and events, along with their properties and relations, according to a system of categories.” An ontology provides a set of definitions for the meaning of URIs used in Linked Data. Ontologies, which are defined using specifications standardized by the W3C, allow the definition of classes, relationships, and properties to be used in a data model.

What is the CIDOC CRM?

CIDOC CRM, the Conceptual Reference Model (CRM) created by the International Committee for Documentation (CIDOC) of the International Council of Museums, is an extensive cultural-heritage ontology containing eighty-two classes and 263 properties, including classes to represent a wide variety of events, concepts, and physical properties. It is recognized as International Organization for Standardization (ISO) 21127:2006. For more information about the CIDOC CRM, visit the website http://www.cidoc-crm.org.

Is all LOD linkable, regardless of which ontology is used?

Yes, all LOD is linkable because the links are between the subject and object entities regardless of the ontology and syntax that are used to model the data. You can therefore link artists in your specific ontology format to the Getty’s Union List of Artist Names (ULAN) or DBpedia, for example, and link places to GeoNames or the Getty Thesaurus of Geographic Names (TGN), and the like. You can also link your object LOD to information about an object from another museum that is in LOD, even if that institution uses a different ontology.
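To make this concrete, here is a small Python sketch, under stated assumptions, of two museums that model their data with different predicates yet point at the same external identifier for an artist. All URIs are hypothetical placeholders (the shared authority URI stands in for something like a ULAN record), and the join is done by hand rather than by any particular LOD tool.

```python
from collections import defaultdict

# Placeholder for a shared authority record, e.g. an artist in ULAN.
ARTIST = "https://authority.example/artist/42"

# Museum A and Museum B use different (hypothetical) ontologies,
# so their predicate URIs differ, but both link to the same artist URI.
museum_a = [
    ("https://museum-a.example/object/1", "https://museum-a.example/ont/madeBy", ARTIST),
    ("https://museum-a.example/object/2", "https://museum-a.example/ont/madeBy",
     "https://authority.example/artist/99"),
]
museum_b = [
    ("https://museum-b.example/work/77", "https://museum-b.example/schema/creator", ARTIST),
]


def subjects_by_object(triples):
    """Index subjects by the URI they point at, ignoring the predicate."""
    index = defaultdict(set)
    for subject, _predicate, obj in triples:
        index[obj].add(subject)
    return index


def shared_links(a, b):
    """Return entities referenced by both data sets and the works that reference them."""
    index_a, index_b = subjects_by_object(a), subjects_by_object(b)
    return {
        uri: (sorted(index_a[uri]), sorted(index_b[uri]))
        for uri in index_a.keys() & index_b.keys()
    }


print(shared_links(museum_a, museum_b))
```

The connection is found through the shared artist URI alone; neither museum needs to adopt the other's ontology for the link to exist.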

What are a triplestore and SPARQL endpoint?

A triplestore is a purpose-built database for the storage and retrieval of triples (statements in the form of subject, predicate, and object) through semantic queries. Triplestores support the Semantic Protocol and RDF Query Language (SPARQL).

SPARQL is a query language used to search RDF data, and the endpoint is a networked interface created to facilitate these queries. An endpoint is used to query a triplestore and deliver results, primarily for researchers with specific queries.
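The idea of storing triples and retrieving them by pattern can be sketched with a toy, in-memory store in Python. This is not how a production triplestore or SPARQL engine is built; the class name, the data, and the URIs are all hypothetical, and the comment shows the SPARQL query that the pattern match roughly corresponds to.

```python
class TinyTripleStore:
    """A toy in-memory store that supports single triple-pattern lookups."""

    def __init__(self):
        self._triples = set()

    def add(self, s, p, o):
        self._triples.add((s, p, o))

    def match(self, s=None, p=None, o=None):
        """Return matching triples; None is a wildcard, like a SPARQL variable."""
        return sorted(
            t for t in self._triples
            if (s is None or t[0] == s)
            and (p is None or t[1] == p)
            and (o is None or t[2] == o)
        )


CREATOR = "http://purl.org/dc/terms/creator"
ARTIST = "https://museum.example/person/7"  # hypothetical URI

store = TinyTripleStore()
store.add("https://museum.example/object/1", CREATOR, ARTIST)
store.add("https://museum.example/object/2", CREATOR, ARTIST)
store.add("https://museum.example/object/3", CREATOR, "https://museum.example/person/8")

# Roughly equivalent SPARQL:
#   SELECT ?work WHERE {
#     ?work <http://purl.org/dc/terms/creator> <https://museum.example/person/7> .
#   }
works = [s for s, _p, _o in store.match(p=CREATOR, o=ARTIST)]
print(works)
```

A real endpoint accepts the SPARQL text over HTTP and runs it against the triplestore; the sketch only mirrors the matching step.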

What is GitHub, and is it necessary to use it?

GitHub is a web-based data and code-hosting service. AAC’s GitHub was the central working space for producing LOD. Each participating museum had a separate directory in GitHub in which to store its exported data. As the students working at the Information Sciences Institute (ISI) at University of Southern California (USC), Los Angeles, mapped the data, the AAC museums, consultants, and advisers could track their progress and post comments within each museum’s repository in GitHub. While GitHub was a key component for AAC, it is not necessary to use GitHub to produce LOD.

What is a target model?

A target model is a subset of all your data mapping possibilities. It acts like a road map when mapping data to minimize the guesswork and help provide consistency across your data. You will need to select your ontology or ontologies before creating a target model or using an existing one.

If you choose the CIDOC CRM, remember that there are different schools of thought about how it should be applied. Your target model should reflect how you wish to apply the CRM.

What is AAC’s target model?

AAC’s target model is a profile of the CIDOC CRM Linked Data model designed to work across many museums and enable functional applications to be built using the model. More specifically, because the AAC target model is standardized, many institutions can use it to publish and share their data with little reworking of data. The AAC target model supports varying levels of completeness, or detail, in the data, as it uses the CIDOC CRM alongside other RDF ontologies available outside the museum community, where needed. It is closely aligned with common controlled vocabularies (currently, the Getty’s Art & Architecture Thesaurus). The AAC target model minimizes the learning curve about LOD modeling for institutions’ staff. It also supports the important concept that the data should be able to travel “round trip,” meaning that the data converted from source systems to Linked Data can be converted back into the format of the original system with no loss of data or change in the level of detail.

The AAC target model currently covers 90 percent of the possibilities the CRM offers, with only 10 percent of the complexity of the full CRM ontology.
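The “round trip” idea can be illustrated with a deliberately simplified Python sketch: a fixed mapping from source fields to predicates is applied in one direction to produce triples and in the other to rebuild the original record. The field names, base URI, and flat Dublin Core predicates are assumptions made for brevity; the actual AAC target model uses CIDOC CRM patterns that are richer than this.

```python
# A very small stand-in for a target model: one predicate per source field.
FIELD_TO_PREDICATE = {
    "title": "http://purl.org/dc/terms/title",
    "date": "http://purl.org/dc/terms/created",
    "medium": "http://purl.org/dc/terms/medium",
}
PREDICATE_TO_FIELD = {p: f for f, p in FIELD_TO_PREDICATE.items()}

BASE = "https://museum.example/object/"  # hypothetical URI root


def record_to_triples(record):
    """Convert one source record into (subject, predicate, object) triples."""
    subject = BASE + record["id"]
    return [
        (subject, FIELD_TO_PREDICATE[field], value)
        for field, value in record.items()
        if field != "id"
    ]


def triples_to_record(triples):
    """Rebuild the source record from triples that share one subject."""
    subject = triples[0][0]
    record = {"id": subject[len(BASE):]}
    for _subject, predicate, value in triples:
        record[PREDICATE_TO_FIELD[predicate]] = value
    return record


source = {"id": "1979.12", "title": "Sunset Study", "date": "1885", "medium": "oil on canvas"}
triples = record_to_triples(source)
restored = triples_to_record(triples)
print(restored == source)
```

Because the mapping is one-to-one, nothing is lost in either direction, which is the property the round-trip requirement asks for.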

What decisions shaped AAC’s target model?

Several challenges influenced the shape of AAC’s target model. Among them were issues of legacy data, which partners could not resolve within the scope of the project; the complexity of the CIDOC CRM, which depends on details that partner data did not include; places where the CRM did not work well with the aim of the web delivery of LOD, so other ontologies needed to be incorporated alongside the CRM; and a conscious effort to make data mapping basic for the museum community. A full description of AAC’s target model can be found at https://linked.art.

The AAC GitHub link captures the dialogue that influenced AAC’s target model. These discussions can be found within individual partner directories at https://github.com/american-art.

What is Linked Art?

Linked Art describes itself on its website (https://linked.art): “Linked Art is a Community working together to create a shared Model based on Linked Open Data to describe Art. We then implement that model in Software and use it to provide valuable content. It is under active development and we welcome additional partners and collaborators.” Linked Art provides patterns and models that enable cultural-heritage institutions to easily publish their data for event-based digital research projects and non-cultural-heritage developers. It includes the AAC target model and applies it to additional projects, such as those developed by the J. Paul Getty Museum, the Getty Research Institute (Getty Provenance Index), and Pharos, an international consortium of fourteen European and North American art historical photo archives.

What is IIIF?

The International Image Interoperability Framework (IIIF) is a set of agreements for standardized image storage and retrieval. A broad community of cultural-heritage organizations and vendors created IIIF in a collaborative effort to produce an interoperable ecosystem for images. The goals of the project are to:

- Give scholars an unprecedented level of uniform and rich access to image-based resources hosted around the world

- Define a set of common application programming interfaces (APIs) that support interoperability between image repositories and supporting image viewers

- Develop, cultivate, and document shared technologies, such as image servers and web clients, that provide a world-class user experience in viewing, comparing, manipulating, and annotating

For more information about the IIIF, visit the website http://iiif.io.
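As an example of the common APIs mentioned above, the IIIF Image API addresses an image, or a region of it, through a URL of the form `{base}/{identifier}/{region}/{size}/{rotation}/{quality}.{format}`. The Python sketch below builds such URLs; the server address and identifier are hypothetical, and the default values shown (`full`, `max`, `0`, `default`) follow the Image API 2.1 and 3.0 conventions as far as we are aware, so they should be checked against the version your image server supports.

```python
from urllib.parse import quote


def iiif_image_url(base, identifier, region="full", size="max",
                   rotation="0", quality="default", fmt="jpg"):
    """Build an IIIF Image API request URL from its path segments."""
    # The identifier is percent-encoded so that characters such as "/"
    # are not mistaken for path separators.
    encoded = quote(identifier, safe="")
    return f"{base.rstrip('/')}/{encoded}/{region}/{size}/{rotation}/{quality}.{fmt}"


BASE = "https://images.museum.example/iiif"  # hypothetical image server

# The whole image at the largest size the server will deliver.
full_image = iiif_image_url(BASE, "obj/1")

# A 200-pixel-wide thumbnail of the whole image.
thumbnail = iiif_image_url(BASE, "obj/1", size="200,")

# The top-left quarter of the image, selected by percentage.
detail = iiif_image_url(BASE, "obj/1", region="pct:0,0,50,50")

print(full_image)
print(thumbnail)
print(detail)
```

Because every compliant server understands the same URL pattern, a viewer can request details or thumbnails from any institution without custom code per repository.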

What open-source tools are available to map, produce, review, and reconcile LOD?

The Open Community Registry of LOD for GLAM (Galleries, Libraries, Archives, Museums) Tools is a good resource. For information, see the spreadsheet https://docs.google.com/spreadsheets/d/1HVFz7p-8Rm3kmDK0apMsrwV_Q0BaDSs…. Some key mapping tools for museums are:

- 3M: The 3M online open-source data mapping system has been jointly developed by the Foundation for Research and Technology–Hellas (FORTH) information systems laboratory in Greece and Delving BV in The Netherlands. It is touted as a tool that allows a community of people to view and share mapping files to increase overall understanding and promote collaboration between the different disciplines necessary to produce quality results. It was expressly designed for making good use of the CIDOC CRM. For more information about 3M, visit the website http://www.ics.forth.gr/isl/index_main.php?l=e&c=721.

- Karma: Karma is an open-source integration tool that enables users to quickly and easily integrate data from a variety of data sources, including databases, spreadsheets, delimited text files, Extensible Markup Language (XML), JavaScript Object Notation (JSON), Keyhole Markup Language (KML), and web APIs. It was developed by professors Craig Knoblock and Pedro Szekely of USC’s Information Sciences Institute (ISI). Users integrate information by modeling it according to an ontology of their choice using a graphical interface that automates much of the process. Karma learns to recognize the mapping of data to ontology classes and then uses the ontology to propose a model that ties together the classes. Users then interact with the system to adjust the automatically generated model. During the process, users can transform the data as needed to normalize data expressed in different formats and restructure it. Once the model is complete, users can publish the integrated data as RDF or store it in a database. A video explaining how Karma works is available at the website http://karma.isi.edu.

- A data generation tool within Karma applies mappings to the data sets to create the RDF data and load it directly into a triplestore.

- A mapping validation tool, produced by Design for Context, a consultant for AAC, provides a specification of the precise ontology mapping and a corresponding query that returns the data only if it has been correctly mapped. For more information about the mapping validation tool, see https://review.americanartcollaborative.org.

- A link curation tool, designed and produced by ISI, allows users to review links to other LOD resources such as ULAN. See https://github.com/american-art/linking.

- An IIIF translator tool, produced by ISI, automatically creates IIIF manifests (the format required to run IIIF viewers and applications) from the museum data that the AAC project has mapped to the CIDOC CRM. In addition to creating the required metadata, the IIIF translator follows the links for every image provided by the museums, determines the size of each image, and creates a thumbnail of each image as part of the process of creating the IIIF manifests. See https://github.com/american-art/iiif.
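To give a sense of what an IIIF manifest contains, the following Python sketch assembles a minimal one in the style of the IIIF Presentation API 2.x. It is not the ISI translator's code: the function name, URIs, and image dimensions are hypothetical, and the sizes are passed in as inputs here rather than being determined by fetching each image.

```python
import json


def build_manifest(manifest_id, label, images):
    """Assemble a minimal Presentation API 2.x-style manifest.

    `images` is a list of dicts with "url", "width", and "height" keys.
    Each image becomes one canvas carrying one painting annotation.
    """
    canvases = []
    for n, img in enumerate(images, start=1):
        canvas_id = f"{manifest_id}/canvas/{n}"
        canvases.append({
            "@id": canvas_id,
            "@type": "sc:Canvas",
            "label": f"Image {n}",
            "width": img["width"],
            "height": img["height"],
            "images": [{
                "@type": "oa:Annotation",
                "motivation": "sc:painting",
                "on": canvas_id,  # the annotation targets its own canvas
                "resource": {
                    "@id": img["url"],
                    "@type": "dctypes:Image",
                    "width": img["width"],
                    "height": img["height"],
                },
            }],
        })
    return {
        "@context": "http://iiif.io/api/presentation/2/context.json",
        "@id": manifest_id,
        "@type": "sc:Manifest",
        "label": label,
        "sequences": [{"@type": "sc:Sequence", "canvases": canvases}],
    }


manifest = build_manifest(
    "https://museum.example/iiif/object/1/manifest",  # hypothetical URI
    "Sunset Study",
    [
        {"url": "https://museum.example/images/1-front.jpg", "width": 3000, "height": 2000},
        {"url": "https://museum.example/images/1-back.jpg", "width": 3000, "height": 2000},
    ],
)
print(json.dumps(manifest, indent=2))
```

An IIIF viewer reads this JSON to learn which images belong to the object, in what order, and at what dimensions.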

Which tools did AAC use?

AAC used ISI’s Karma to map all the data and produce RDF. ISI’s link-curation tool was used to link AAC names to ULAN. Design for Context’s validator tool was used to proof the AAC data for consistency.

What is the difference between an ontology and the Karma mapping tool?

As stated earlier, an ontology is a schema or conceptual framework that gives data meaning. Karma is a tool for mapping data to an ontology and using that mapping to produce RDF.

Does our institution need to have a usage policy in place for our data and images before engaging in LOD?

Developing an institutional policy requires time for all the appropriate signatures and authorizations. It is recommended, therefore, that your institution develop a digital usage policy before or as soon as you engage in LOD. In theory, you could produce LOD without including images, but most museums will want to provide public access to their images, so having a usage policy for images as well as for data is important.

What are my options for selecting a license?

When you engage in LOD, you should choose a Creative Commons (CC) license, which enables the right to share, use, and build upon a work. For more information about CC licenses, visit the website https://creativecommons.org/licenses, which outlines various types of licenses that are available. For a better understanding of the choices, see recommendation 2, “Choose Image and Data Licenses That Are Easily Understood,” in part 2 of this guide, “Recommendations for Good Practices.” You may wish to select different licenses for images and data. In some cases, you may even decide to apply different licenses to different images.

Which person in a museum should undertake the mapping of data to LOD?

The staff members involved in mapping your museum’s data to LOD will depend on how your museum is organized and the technical knowledge of the staff. A team effort is ideal. The actual mapping process and use of a tool such as 3M or Karma (http://karma.isi.edu) will require some technical knowledge, which could come from information technology staff. To benefit from the depth of coverage of the CIDOC CRM, curators as well as collection managers and registrars with in-depth knowledge of your museum’s data should be involved.

What technical skills are helpful for staff who are publishing and maintaining LOD?

Knowledge of JavaScript Object Notation for Linked Data (JSON-LD), RDF, RDF databases called triplestores (see FAQ “What are a triplestore and SPARQL endpoint?”), ontologies, and web infrastructure is an asset for staff participating in LOD. To make the processes for converting and publishing LOD sustainable over time, the technical team involved should understand scripting pipelines that can manage the conversion and movement of data between repositories.
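For staff new to JSON-LD, the Python sketch below shows a small JSON-LD document and a hand-written expansion of it into triples. The object and person URIs are hypothetical, and `simple_triples` is an illustrative helper that handles only the two context shapes used here; a real JSON-LD processor implements far more of the specification.

```python
import json

doc = {
    # The context maps short, readable keys to full predicate URIs.
    "@context": {
        "label": "http://www.w3.org/2000/01/rdf-schema#label",
        # "@type": "@id" says the value is a URI, not a plain string.
        "creator": {"@id": "http://purl.org/dc/terms/creator", "@type": "@id"},
    },
    "@id": "https://museum.example/object/1",
    "label": "Sunset Study",
    "creator": "https://museum.example/person/7",
}


def simple_triples(document):
    """Expand a flat JSON-LD document into (subject, predicate, object, kind) tuples."""
    context = document["@context"]
    subject = document["@id"]
    triples = []
    for key, value in document.items():
        if key.startswith("@"):
            continue  # skip JSON-LD keywords such as @context and @id
        definition = context[key]
        if isinstance(definition, str):
            triples.append((subject, definition, value, "literal"))
        else:
            kind = "uri" if definition.get("@type") == "@id" else "literal"
            triples.append((subject, definition["@id"], value, kind))
    return triples


print(json.dumps(doc, indent=2))
for triple in simple_triples(doc):
    print(triple)
```

The same data can therefore be read as ordinary JSON by a web developer and as RDF triples by LOD tools.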

How can my institution begin long-range planning for LOD?

Developing an institutional policy for publishing data and images and choosing a license would help set the stage for your museum’s LOD initiative. A good example can be seen on the website https://thewalters.org/rights-reproductions.aspx for the Walters Art Museum, Baltimore, Maryland. The museum’s Policy on Digital Images of Collection Objects states: “The Walters Art Museum believes that digital images of its collection extend the reach of the museum. To facilitate access and usability, and to bring art and people together for enjoyment, discovery, and learning, we choose to make digital images of works believed to be in the public domain available for use without limitation, rights- and royalty-free.”

Planning what data you would like to convert to LOD and reviewing your institution’s data for completeness would be the next logical steps. See recommendation 3, “Plan Your Data Selection,” in “Recommendations for Good Practices,” which covers planning, exporting your data, and legacy data issues.

It would also be useful to educate yourself about LOD by reviewing the educational briefings, papers, and presentations posted on the AAC website.

What minimum data is needed to begin LOD, and can I add more data in phases?

A museum could choose to begin with the basic descriptions of its objects, sometimes called “tombstone data,” and add more data over time. Others may wish to take a project-based approach by using LOD to develop a theme in depth, such as focusing on a certain artist or collection.

If I need to update my data, do I have to remap everything?

If you are using Karma (see http://karma.isi.edu) to map your data, many updates to the data can be made without requiring additional work. The three possible scenarios are:

- The format of your updated data is the same as the data that was published. In other words, you have updates to some of the data values but no additional fields or other changes. In this case, the Karma model that you built will apply directly to the updated data without any additional work.

- The format of the data has changed for a data set that was previously mapped. In this case, you will need to load the revised data set into Karma. You can apply the earlier Karma model to this data, but the model will need to be updated to reflect the changes in the data set. Once this is done, save the model; it can then be applied to future versions of this data set as long as only the data values change.

- The data set is new and has not previously been modeled in Karma. In this case, you will need to load the new data set into Karma, then construct and save a model of the data.

Note that you can save time updating if you prepare a script to automate your workflow for extracting your data before mapping. Scripts would also be helpful for taking the converted data and moving it into the triplestore and other repositories. Scripted methods will minimize the effort a museum must make to incorporate updates into its LOD at routine intervals.
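As an illustration of the kind of script mentioned above, the following minimal sketch decides which of the three update scenarios applies by comparing the column headers of a previous export with those of a new one. It assumes the exports are CSV files; the function names and scenario labels are hypothetical and are not part of Karma.

```python
import csv
import io


def read_header(csv_text):
    """Return the column names from the first row of a CSV export."""
    return next(csv.reader(io.StringIO(csv_text)), [])


def classify_update(previous_header, new_header):
    """Pick which of the three update scenarios applies to a new export.

    previous_header is None when the data set has never been modeled.
    Column order is treated as part of the format.
    """
    if previous_header is None:
        return "new_data_set"    # load it, then construct and save a model
    if previous_header == new_header:
        return "same_format"     # the saved model applies as-is
    return "changed_format"      # the saved model must be updated first


# Hypothetical exports: the second one adds a "date" column.
previous = read_header("object_id,title,artist\n1,Untitled,Example Artist\n")
updated = read_header("object_id,title,artist,date\n1,Untitled,Example Artist,1885\n")
print(classify_update(previous, updated))
```

A check like this could run at the start of a scheduled update so that staff are alerted only when a model actually needs attention.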

Do I need to link to the Getty vocabularies before I convert our data to LOD?

It is not necessary to link to the Getty vocabularies before converting data to LOD. Should you wish to link to the Getty vocabularies before mapping, please note that the Getty Vocabulary Program’s website contains a quick reference guide.

How do I link to the Getty vocabularies once I am using LOD?

The Getty Vocabulary Program is working with AAC to produce an API that will streamline submission of LOD vocabularies and return the URIs or IDs to the contributing institutions for incorporation into their collection information systems (CISs).

What are some of the LOD resources to consider for linking?

The most common LOD resources today are DBpedia, the Getty vocabularies, GeoNames, and Virtual International Authority File (VIAF).

Once I convert my museum’s data to LOD, can I interconnect it with LOD from other museums?

Yes! The “Linked” part of Linked Open Data is a critical component, but it does not occur automatically. As web pages link to one another by adding in the links to the HTML, so can LOD link to other LOD resources. For example, you can choose to link your data to related resources as mentioned in the FAQ above.
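To make the idea of adding links concrete, here is a minimal sketch that attaches external authority URIs to a local record expressed as JSON-LD. The local URI, the label, and the placeholder Getty identifier are hypothetical, and the choice of skos:exactMatch as the linking property is an assumption for illustration; your ontology or target model may prescribe a different property.

```python
import json

CONTEXT = {
    "rdfs": "http://www.w3.org/2000/01/rdf-schema#",
    "skos": "http://www.w3.org/2004/02/skos/core#",
}


def link_record(local_uri, label, external_uris):
    """Build a JSON-LD document that links a local entity to external LOD."""
    return {
        "@context": CONTEXT,
        "@id": local_uri,
        "rdfs:label": label,
        # Each link is an explicit statement; nothing is linked automatically.
        "skos:exactMatch": [{"@id": uri} for uri in external_uris],
    }


# Hypothetical local URI and placeholder authority identifier.
doc = link_record(
    "https://data.example-museum.org/person/7",
    "Example Artist",
    ["http://vocab.getty.edu/ulan/PLACEHOLDER_ID"],
)
print(json.dumps(doc, indent=2))
```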

Will my institution lose its identity or authority over usage of our object information with LOD?

The idea of LOD is to make it widely available so that other institutions, scholars, and the public can connect with it or use it. It is thus important to state clearly your institution’s license conditions, if any. The data published as Linked Data from a museum should use a URI that designates the museum’s identity. Anyone using the data will give authority to the institution that is publishing the data.

Approximately how much time does it take to convert museum data to LOD?

The time involved in converting museum data to LOD will largely depend on the ontology or ontologies you choose for mapping, the tool you select for converting the data, who performs the mapping, their familiarity with your data, and their level of expertise in using mapping tools. The Yale Center for British Art estimates that it took about two years to map some fifty thousand objects (paintings, sculpture, prints, drawings, watercolors, and frames). The time included the intellectual mapping, writing the code for the script that does the transformation, and putting in place the triplestore and various other pieces of their digital infrastructure to share their CRM-based RDF dataset.

In the case of the AAC, the mapping was outsourced and corrected at intervals as needed. The AAC grant ran for eighteen months, during which time the participants attended many educational briefings, workshops, and in-person meetings and dedicated time as needed for preparing and extracting their data, proofing data, and performing similar tasks. The average time spent by the AAC participants was 169 hours over the duration of the grant.

What resources will I need to host LOD?

As an entry-level approach, a museum could choose to produce JSON-LD and put it on a web server to be served statically or via another software application that works with that format (the AAC browse demo application uses Elasticsearch). In the case of AAC, the initial recommendation was for each museum either to have its own server that could support a triplestore/SPARQL endpoint or form a hub and share a SPARQL endpoint (see FAQ, “What are a triplestore and SPARQL endpoint?”).

During the production phase of AAC, ISI hosted the data using the SPARQL server Apache Jena Fuseki 2+, which is free; for details, see https://jena.apache.org/documentation/fuseki2/index.html. Note that an institution could also use a cloud-computing solution and would therefore be renting space, not requiring any hardware.
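The entry-level approach of serving static JSON-LD can be sketched as follows: one file per object, written to a directory that a web server could expose. This is an illustration only. The base URI and record fields are hypothetical, and Dublin Core terms are used here purely to keep the example short; they are not the ontology chosen by AAC.

```python
import json
import tempfile
from pathlib import Path

BASE_URI = "https://data.example-museum.org/object/"  # hypothetical

# Assumption for brevity: simple Dublin Core terms for "tombstone" fields.
CONTEXT = {
    "title": "http://purl.org/dc/terms/title",
    "creator": "http://purl.org/dc/terms/creator",
}


def write_static_jsonld(records, out_dir):
    """Write one JSON-LD file per record and return the paths written."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for record in records:
        doc = {
            "@context": CONTEXT,
            "@id": BASE_URI + record["id"],
            "title": record["title"],
            "creator": record["creator"],
        }
        path = out / (record["id"] + ".json")
        path.write_text(json.dumps(doc, indent=2), encoding="utf-8")
        written.append(path)
    return written


# Demonstration against a temporary directory instead of a real web root.
with tempfile.TemporaryDirectory() as tmp:
    sample = [{"id": "1985-12", "title": "Untitled Landscape", "creator": "Example Artist"}]
    paths = write_static_jsonld(sample, tmp)
    print(paths[0].name)
```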

How do options for hosting LOD influence how it is accessed and used?

Once the data is generated, people will want to access the data in several ways. One is via a SPARQL endpoint, through which a developer can run Linked Data queries directly against the LOD graph. This function is useful for semantic developers who want to ask specific research questions or build live applications against the data, but it is unreliable and expensive to maintain.
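For readers unfamiliar with how a developer talks to a SPARQL endpoint, the sketch below builds (but does not send) a query request in the style of the SPARQL 1.1 Protocol, where the query text travels in a URL-encoded query parameter. The endpoint address is hypothetical, and the Dublin Core title property in the query is an assumption; a real data set would use the properties of its own ontology.

```python
from urllib.parse import parse_qs, urlencode, urlsplit
from urllib.request import Request

ENDPOINT = "https://sparql.example-museum.org/query"  # hypothetical endpoint

# Ask for ten objects and their titles (assumed to use dcterms:title).
QUERY = """
SELECT ?object ?title WHERE {
  ?object <http://purl.org/dc/terms/title> ?title .
} LIMIT 10
"""


def build_sparql_request(endpoint, query):
    """Return an HTTP GET request carrying the query as a URL parameter."""
    url = endpoint + "?" + urlencode({"query": query})
    # Ask the server for results in the SPARQL JSON results format.
    return Request(url, headers={"Accept": "application/sparql-results+json"})


request = build_sparql_request(ENDPOINT, QUERY)
print(request.get_method(), request.full_url[:60])
```

Sending the request (for example with urllib.request.urlopen) is left out on purpose, since it depends on a live endpoint.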

Another possibility for hosting and accessing the data is via a dump, providing an easy, bulk download for all the generated triples. This would be useful for researchers or developers who want to build an application or run a research project using large amounts of the data. The bulk download is inexpensive and easy to host, but it limits access to developers who can not only write SPARQL but also set up their own triplestore.

Finally, the data can be presented via an API. Developers use a common set of functions that send requests and receive responses via Hypertext Transfer Protocol (HTTP) methods such as GET and POST, and the API returns Linked Data documents (typically, one document per entity), which are specific, curated subsets of the data. Creating an API is the easiest way for non-semantic developers to work with the data. But each document is only a subset of the data, so if the research question or application you have in mind is different from the one that the data curator assumed, the result can be disappointing. The data presented for an entity can also leave out some data that might be connected to the entity in the graph; there is no guarantee that all the triples are available in that specific view.
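The trade-off described above can be shown with a small sketch: an entity document is built from only those triples that a curator chose to include, so other triples about the same entity remain in the graph but not in the document. The triples, the "ex:" identifiers, and the function name are all hypothetical.

```python
# A tiny in-memory "graph" of (subject, predicate, object) triples.
TRIPLES = [
    ("ex:object/1", "ex:title", "Untitled Landscape"),
    ("ex:object/1", "ex:creator", "ex:person/7"),
    ("ex:object/1", "ex:exhibitedAt", "ex:event/3"),
    ("ex:person/7", "ex:name", "Example Artist"),
]


def entity_document(triples, subject, included_predicates):
    """Build a per-entity document from a curated subset of predicates."""
    doc = {"@id": subject}
    for s, p, o in triples:
        if s == subject and p in included_predicates:
            doc.setdefault(p, []).append(o)
    return doc


# The curator decided that titles and creators belong in the document.
doc = entity_document(TRIPLES, "ex:object/1", {"ex:title", "ex:creator"})
print(doc)
```

In this sketch the exhibition triple exists in the graph but is absent from the document, which is exactly the situation a developer with a different research question would run into.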

What did AAC’s browse demo set out to achieve?

The browse application aimed to provide a way for users—including museum staff, scholars, and eventually public art enthusiasts—to engage with the data from multiple institutions and see benefits that can arise only from the way the data is linked.

The AAC partners wanted an application that allows users to find connections between works that come to the surface by the links in the data. The goal is not to search for a specific item of interest. Rather, it is to move from item to item based on the compelling—and sometimes unexpected—relationships between them.

Serendipity, discovery, and rich relationships were desired to illustrate the value of using a Linked Data approach.

The AAC produced a browse demo in part to help the partner institutions see what was possible given the unique links that could be established across the works of the partner institutions. Owing to limitations in the amounts of data that could be provided, the initial demonstration version does not offer all the richness we envision with LOD, but it points the way to future capabilities.

What challenges does LOD present in designing a browse function?

The main challenges that arise when creating an application to browse LOD are the same ones that ensue when producing other types of applications. Is the data you want to use available within the source systems, and is it of sufficient quality to be used by computers? Sometimes, computer applications require specific formats that are different from the original, human-readable formats of the data: they need machine-readable dates, for example, or dimensions in which the numbers and units of measure are in separate fields. Another challenge is making sure, when there are multiple values for a data item (such as the title of an artwork), that the computer knows which title is the preferred title, to be used by default.
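Two of the challenges above, separating numbers from units in a dimension statement and choosing a preferred title, can be sketched as follows. The input formats, the assumption that height comes before width, and the "preferred" flag are hypothetical; real museum data varies far more than this pattern allows.

```python
import re

# Matches statements such as "24 x 36 in." or "30.5 × 40 cm".
DIMENSION_PATTERN = re.compile(
    r"(\d+(?:\.\d+)?)\s*[x×]\s*(\d+(?:\.\d+)?)\s*(in|cm)\b",
    re.IGNORECASE,
)


def parse_dimensions(text):
    """Split a human-readable dimension statement into numbers and a unit."""
    match = DIMENSION_PATTERN.search(text)
    if match is None:
        return None  # leave unparseable values for a subject specialist
    return {
        "height": float(match.group(1)),  # assumes height is listed first
        "width": float(match.group(2)),
        "unit": match.group(3).lower(),
    }


def preferred_title(titles):
    """Return the title flagged as preferred, else the first one, else None."""
    for title in titles:
        if title.get("preferred"):
            return title["value"]
    return titles[0]["value"] if titles else None


print(parse_dimensions("24 x 36 in."))
print(preferred_title([
    {"value": "Landscape Study"},
    {"value": "Untitled Landscape", "preferred": True},
]))
```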

Data in source systems carry a lot of museum-specialized assumptions, rules, and norms that need to be checked by subject specialists in the museum. LOD itself, as a data format, requires an understanding of the technical syntax on the part of the people who work with it. The browse application helps museum staff see their data in a familiar, human-readable way.

The technical environment of the triplestore may not be sufficiently robust for use in real time. AAC’s approach was to use the triplestore for complex, specialized analysis and at the same time export the RDF triples through a conversion process that produced JSON-LD. This allows for simpler processing that supports high-performance, scalable day-to-day use.

As new LOD becomes available, how do I learn about and connect to it?

This “discovery” challenge is a recognized issue within the cultural-heritage community, and standards-based solutions are emerging. In the meantime, the LOD for Libraries, Archives, and Museums (LODLAM) community is an excellent source as new data sets become available.

What do I tell my administration about LOD to convince them to support it?

- The internet is undergoing another revolution, with Linked Data formats increasingly being used as the underlying lingua franca for web

- The internet is changing from an internet of documents to the internet of knowledge!

- Until now, when people have searched the internet, they have been presented with an array of hyperlinks to potentially relevant pages. The researcher must review all the links to determine which are relevant to the search at hand.

- A new way to publish information is called Linked Open Data (LOD), which precisely links and interconnects information so that searches are direct, accurate, and immediate. The links contain expressions about why two things are linked, and the meaning of the relationships between them, stated in ways that computers as well as humans can understand.

- LOD uses a markup language called Resource Description Framework (RDF) that, when combined with an ontology, interconnects concepts (people, places, events, and things). The result is that a search connects to the exact concept being sought and avoids the “noise” that sometimes confuses online searching.

- LOD is making headway in the commercial, communications, and publishing worlds. Google, Facebook, the New York Times, US government agencies, Defense Advanced Research Projects Agency (DARPA), and many other institutions are implementing LOD. The European Union is building bridges across its libraries, archives, and museums using the digital platform Europeana, the Linked Open Data Initiative of the Europeana Foundation, The Netherlands.

- LOD is here to stay. Its flexible approach to creating meaningful links is the way of the future for data

- The benefits of LOD for museums are huge:

  - LOD could connect data about one artist, for example, across all museums. Millions of people researching that artist would discover who has what art by that artist and where. LOD will increase museum visibility.

  - LOD will reveal relationships among works of art because it will make connections among hundreds of related works.

  - By linking concepts such as events, dates, people, and places across all domains, LOD will expose new information about a work of art. It will thus boost research that will lead to new discoveries.

  - By its nature, LOD is a collaborative platform that museums can use to deepen audience engagement. Like Wikipedia, LOD provides an opportunity for the public to participate and help supply information (note that unlike Wikipedia, LOD provides no editing capability, but it is possible to build an application on top of Linked Data that would allow people to contribute suggested changes).

- In summary, LOD leverages the power of digitization.

What are some of the unique features that can be achieved with LOD over traditional research?

We are all looking for concrete demonstrations of LOD that illustrate its value. As more data becomes available as LOD, it will be possible to demonstrate the benefits of searching across several collections, having cross-domain access, creating new opportunities that build on its collaborative structure, and the like. One feature of LOD that has already intrigued art historians is the graphical display of LOD that can point to interconnections of time and place, for example, all the artists associated with a certain café in Paris during a specific period. The capability of LOD to produce graphical networks or connections can lead to new observations and conclusions (see note 2).

What LOD museum projects are under way in the cultural-heritage domain?

In addition to the LOD Initiative of the American Art Collaborative (AAC), some current projects engaged in LOD are the Arachne Project, Cologne; Arches, a collaboration between the Getty Conservation Institute, Los Angeles, and the World Monuments Fund, New York; Art Tracks, Carnegie Museum of Art, Pittsburgh, Pennsylvania; Canadian Heritage Information Network (CHIN) of the Department of Canadian Heritage; CLAROS (Classical Art Research Online Research Services), a federation led by the University of Oxford; Finnish National Gallery, Helsinki; Germanisches Nationalmuseum, Nuremberg; the Getty Provenance Index (GPI) and Getty Vocabulary Program, Getty Research Institute, Los Angeles; Pharos, the International Consortium of Photo Archives; ResearchSpace located in the British Museum, London; and Yale Center for British Art, Yale University, New Haven, Connecticut.

How do I join AAC?

The AAC has an ongoing interest in helping the broad museum community engage in LOD. Owing to fixed grant funding, however, we have had to limit the number of institutions involved thus far. The good news is that we are providing guidelines for the approaches, practices, and many of the tools that museums can utilize to produce LOD. We plan to seek additional funding to expand application of LOD beyond the subject of American art and produce tools that will particularly help small museums to explore LOD. As additional museums produce LOD, we hope that those who are implementing it will contact the AAC (via Eleanorfink@earthlink.net) to discuss opportunities to link or interconnect data and further demonstrate the value of LOD.

Notes

1. Wikipedia, s.v. “Ontology (information science),” last modified October 9, 2017, https://en.wikipedia.org/wiki/Ontology_(information_science).

2. Paul B. Jaskot, “Digital Art History: Old Problems, New Debates, and Critical Potentials” (keynote address for symposium Art History in Digital Dimensions, University of Maryland, College Park, and Washington, DC, October 19–21, 2016).

Working with the Karma data integration tool

Featured Data Integration Tool: Karma

Karma is an open source integration tool that enables users to integrate data from a variety of sources including databases, spreadsheets, delimited text files, XML, JSON, KML and Web APIs. Users integrate information by modeling it according to an ontology of their choice using a graphical user interface that automates much of the process. Karma learns to recognize the mapping of data to ontology classes and then uses the ontology to propose a model that ties together these classes. Users then interact with the system to adjust the automatically generated model. During this process, users can transform the data as needed to normalize data expressed in different formats and to restructure it. Once the model is complete, users can publish the integrated data as RDF or store it in a database.

Visit the Karma page on ISI’s website for more information

Mapping to the CIDOC Conceptual Reference Model (CRM) using Karma

One of the key decisions the AAC made during the Mellon planning grant was agreeing to the use of the CIDOC CRM as the primary ontology. An ontology is a formal naming and definition of types and properties that expresses the relationships among things. The choice of an ontology plays a pivotal role with LOD in achieving precision when searching for information.

The CIDOC Conceptual Reference Model was developed by the Documentation Committee of the International Council of Museums (CIDOC) as a standard for the exchange of information between cultural heritage organizations: museums, libraries, and archives. The CIDOC CRM is comprehensive, containing 82 classes and 263 properties, including classes to represent a wide variety of events, concepts, and physical properties. Furthermore, it includes many properties to represent relationships among entities. It is an ISO standard, ISO 21127:2014.
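To give a feel for what a CRM-based description looks like, the sketch below expresses one object, its title, and its maker as subject-predicate-object triples. It is a simplified illustration and not the mapping produced by AAC. The class and property identifiers are written as they are recalled from the CRM 6.x RDF encoding and should be checked against the version you adopt; the object and artist URIs and the "/title" and "/production" suffixes are hypothetical.

```python
CRM = "http://www.cidoc-crm.org/cidoc-crm/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"


def crm_triples(object_uri, title, artist_uri):
    """Describe an object, its title, and its production event as triples."""
    title_uri = object_uri + "/title"            # hypothetical URI pattern
    production_uri = object_uri + "/production"  # hypothetical URI pattern
    return [
        (object_uri, RDF_TYPE, CRM + "E22_Man-Made_Object"),
        # The title is its own entity, so several titles can coexist.
        (object_uri, CRM + "P102_has_title", title_uri),
        (title_uri, RDF_TYPE, CRM + "E35_Title"),
        (title_uri, RDFS_LABEL, title),
        # The maker is attached to a production event, not to the object.
        (object_uri, CRM + "P108i_was_produced_by", production_uri),
        (production_uri, RDF_TYPE, CRM + "E12_Production"),
        (production_uri, CRM + "P14_carried_out_by", artist_uri),
    ]


triples = crm_triples(
    "https://data.example-museum.org/object/1",
    "Untitled Landscape",
    "https://data.example-museum.org/person/7",
)
for triple in triples:
    print(triple)
```

Modeling the production as an event is what lets the CRM attach dates, places, and multiple participants to the making of a work, at the cost of more triples than a flat record would need.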

The following video illustrates mapping AAC data to the CIDOC CRM using the Karma data integration tool.

Video of Using Karma to map museum data to the CIDOC CRM ontology

Access Raw, Open Museum Data on GitHub

In 2016, AAC Members began uploading raw, open data sets to the GitHub code sharing and publishing service, as a precursor to the process of mapping and converting to linked data.

You can access the data sets and project documentation at github.com/american-art

AAC Browse App Demo

Papers, Articles, and Presentations

This page brings together a selection of papers and presentations by members and advisers of the American Art Collaborative.

Papers and Articles

“Lessons Learned in Building Linked Data for the American Art Collaborative [PDF]” by Craig Knoblock et al. in the proceedings of the International Semantic Web Conference in Vienna, Austria, October 21–25, 2017

“Beyond the Hyperlink: Linked Open Data Creates New Opportunities,” Eleanor Fink, Museum (American Alliance of Museums), Jul/Aug. 2015

“How Linked Open Data Can Help in Locating Stolen or Looted Cultural Property,” Eleanor E. Fink, Pedro Szekely, and Craig A. Knoblock, Springer Verlag, EuroMed, November 2014

“Linked Open Data: The Internet and Museums,” Eleanor Fink, American Art Review, June 2014

“Linked Open Data: Interview with Eleanor Fink,” Visual Resources: an international journal on images and their uses, Volume 30, Issue 2, 2014

“Connecting the Smithsonian American Art Museum to the Linked Data Cloud [PDF]” by Pedro Szekely, Craig A. Knoblock, Fengyu Yang, Xuming Zhu, Eleanor E. Fink, Rachel Allen, and Georgina Goodlander, May 2013

“Art Clouds: Reminiscences and Prospects for the Future [PDF],” talk by Eleanor E. Fink at Princeton University, Digital World of Art History, July 13, 2012

Presentations

Guerrilla Glue: Making Collaborative Digital Innovation Projects Stick

Presentation with Duane Degler, Kristen Regina, Karina Wratschko, Sara Snyder, Kate Blanch, Liz Ehrnst, and David Newbury, given at the 2017 Museum Computer Network conference, Pittsburgh, PA, November 10, 2017

American Art Collaborative

Presentation by Eleanor Fink to “The Networked Curator: Association of Art Museum Curators Foundation Digital Literacy Workshop for Art Curators,” August 2017

Is Linked Open Data the way forward?

Presentation by Eleanor Fink, Shane Richey, Jeremy Tubbs, Rebecca Menendez, and Cathryn Goodwin, 2016 Museums and the Web, Los Angeles, CA, April 6-9, 2016

Report on the American Art Collaborative Project

Presentation by Shane Richey, Neal Johnson, and Kate Blanch given at 2015 Museum Computer Network conference in Minneapolis, MN, November 17, 2015

How Linked Open Data Helps Museums Collaborate, Reach New Audiences, and Improve Access to Art Information

Presentation by Eleanor E. Fink, INTERCOM 2015

The Blossoming of the Semantic Web

Panel presentation given at the 2013 Museum Computer Network conference in Montreal, Canada, November 21, 2013

Connecting the Smithsonian American Art Museum to the Linked Data Cloud

Slides for the paper “Connecting the Smithsonian American Art Museum to the Linked Data Cloud,” presented at the 10th Extended Semantic Web Conference (ESWC) in Montpellier, May 2013. Download the full paper [PDF]

Karma: Tools for Publishing Cultural Heritage Data in the Linked Open Data Cloud

This presentation shows how the Karma tool is used to publish data from cultural heritage databases to the linked data cloud. Karma supports conversion of data to RDF according to user-selected ontologies and linking to other datasets such as dbpedia.org.

American Art Collaborative Goals

Presentation by Pedro Szekely, 2013

The Linked.Art Data Model

For information about the Linked.Art target model and community project, see http://Linked.Art

Below is a video of a presentation on the Linked.Art data model to the AAC members on June 15, 2017 by Rob Sanderson and David Newbury.

Video of the Introduction to the AAC / Linked Art Data Model